The recent leaks uncovering controversial data mining operations at the National Security Agency (NSA) reveal the vast amounts of digital information stored by the organization. In fact, the NSA is currently building a new $1.2 billion data center at a National Guard base 26 miles south of Salt Lake City. Early designs of the 1.5 million-square-foot facility, according to the U.S. Army Corps of Engineers bid packet for the project published by Public Intelligence, included four data halls and two substations. The facility will also require 1.7 million gallons of water each day for cooling and three days' worth of fuel storage for an emergency power outage. The high-performance computers would take up 100,000 square feet of space and are expected to begin collecting email, phone records, text messages, and other electronic data in September.

The bulk of this digital information comes from private corporations. Digital technology supports almost everything we do. Unless you live under the proverbial rock, you participate in digital transactions. Financial, technology, and communications companies, which make up the “big data” industry, are estimated at a value approaching $100 billion — and data centers are now the main infrastructure behind this modern, digital economy.

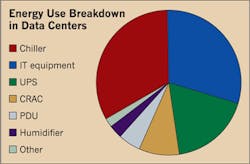

These facilities are also major consumers of energy — some using as much as 100 to 200 times more electricity than standard office spaces — because of the equipment’s demands for both power and cooling (see Figure). Racks of computer servers, which run every hour of every day, can consume up to 300W of electricity for each square foot of floor space.

Does not compute

A recent survey of 300 IT decision makers at large U.S. corporations (each with annual revenue of at least $1 billion or with at least 5,000 employees) by Digital Realty, San Francisco, reveals the average power usage effectiveness (PUE) rating (the measurement of how efficiently data centers use energy to operate computing equipment) increased slightly between 2011 and 2012. The PUE ratio looks at the total energy supplied to a data center, divided by the amount of energy that is actually used by the IT equipment.

A PUE of 2.0 means that for every 2W of power supplied to the data center, only 1W is consumed by computing equipment. The 2.9 rating among the companies surveyed means those firms are consuming an extra 1.9W of power for every 1W that’s used by the IT equipment. The ideal PUE rating is close to 1.0. The companies in a 2011 survey of 1,100 corporations reported an average PUE of 2.8. Even more alarming, figures from the U.S. Environmental Protection Agency showed a 1.9 average PUE in 2007.
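The arithmetic behind these ratings is simple enough to sketch in a few lines. The function names below are illustrative only, and the ratings fed in are the ones quoted above; this is a minimal sketch of the ratio, not a metering methodology.

```python
def pue(total_facility_power_w: float, it_power_w: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_power_w <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_power_w / it_power_w


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts spent on everything except computing, per watt of IT load."""
    return pue_value - 1.0


# 2W supplied for every 1W of computing gives a PUE of 2.0.
print(pue(2.0, 1.0))

# Overhead implied by the ratings mentioned in the text.
for rating in (1.9, 2.0, 2.8, 2.9):
    extra = overhead_per_it_watt(rating)
    print(f"PUE {rating}: {extra:.1f}W of overhead per 1W of IT load")
```

Read this way, a PUE of 1.0 would mean zero overhead, which is why the ideal rating can only be approached and never beaten.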

The survey comes on the heels of recent announcements by tech giants about the energy-efficiency measures they are taking. Last May, Hewlett-Packard announced plans for its net-zero energy data center, which would cut power use by 30% and dependence on the grid by 80%. Facebook’s Prineville, Ore.-based data center, designed on a simpler less-is-more construction philosophy, features an outside-air evaporative cooling and humidification system, along with a ductless air distribution system that can recirculate hot air or eject it from the building altogether. The company’s Open Compute Project is dedicated to creating extremely efficient computing infrastructure at a lower cost, which involves custom building servers, software, and data centers from the ground up.

Meanwhile, the energy that powers Apple’s data center in Maiden, N.C. — around 20MW at full capacity — includes renewable sources like solar arrays and fuel cells. The facility also relies on an energy-efficient design, including a white cool-roof design, recycled and locally sourced construction materials, and real-time energy monitoring and analytics.

Considering these high-profile efforts to cut energy use in data centers, the increase in the PUE ratio seems surprising. The small jump could be related to the increased demand on capacity. As data center operators virtualize servers and use more capacity, they boost the overall efficiency of equipment operation. However, PUE measures only how power is split between IT equipment and everything else, not how much useful work that equipment does. If power requirements stay the same, the PUE does not change, even though the facility is delivering more services per watt. A company that doubles its utilization has effectively doubled the useful output of its data center, yet its PUE stays exactly where it was.
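A small numeric sketch makes the point concrete. Every figure below is hypothetical (the power draw, the IT share, and the abstract "work units" are invented for illustration), and the sketch assumes power draw does not change when utilization rises.

```python
def pue(total_power_kw: float, it_power_kw: float) -> float:
    """Total facility power divided by IT equipment power."""
    return total_power_kw / it_power_kw


def work_per_kw(work_units: float, total_power_kw: float) -> float:
    """Useful output delivered per kilowatt of facility power."""
    return work_units / total_power_kw


# Hypothetical facility: 2,900kW total draw, 1,000kW of it reaching IT equipment.
TOTAL_KW, IT_KW = 2900.0, 1000.0

# Before virtualization: 10,000 arbitrary units of work.
before = {"pue": pue(TOTAL_KW, IT_KW), "work_per_kw": work_per_kw(10_000, TOTAL_KW)}

# After: utilization doubles, power draw assumed unchanged.
after = {"pue": pue(TOTAL_KW, IT_KW), "work_per_kw": work_per_kw(20_000, TOTAL_KW)}

print("PUE before and after:", before["pue"], after["pue"])
print("Work per kW ratio:", after["work_per_kw"] / before["work_per_kw"])
```

The PUE is identical in both cases while the work delivered per kilowatt doubles, which is the blind spot in the metric described above.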

The survey shows the average power load among the companies surveyed increased from 2.1MW in 2011 to 2.6MW in 2013. This correlates with a 0.6kW increase in average power density per rack as companies deploy more powerful servers with better virtualization capabilities. While offering better performance, those servers can also increase on-site cooling demands.

Furthermore, the survey results from Digital Realty aren’t broken out by the size or age of facilities surveyed. Multi-megawatt facilities are not the only data centers. There are smaller distributed IT rooms found in most commercial and industrial facilities. These older, smaller data centers, which also work with smaller budgets, are often slower to upgrade equipment and retrofit mechanical and electrical systems.

The results of the survey are an average of these different categories of data centers. The top consists of the efficient facilities that boast a very low PUE built by the biggest companies; in the middle are the larger and mid-sized companies with a PUE close to the survey’s results; and at the bottom are the smaller players with a poor PUE rating because they don’t have the money to spend on upgrades. Results showed 20% of respondents reported having a PUE of less than 2.0, while 9% had a PUE of 4.0 or greater.

“While a PUE of 2.9 seems terribly inefficient, we view it as being closer to the norm than the extremely low — close to 1.0 — figures reported in the media,” said Jim Smith, Digital Realty’s CTO. “In our view, those figures represent what a very small number of organizations can achieve based on a unique operating model.”

The low-hanging fruit

The survey’s results, which marked a slight increase in PUE, can be attributed to older designs and equipment, underutilized assets, and other design and operating issues. “Designing data center operations around an organization’s infrastructure and operations is not a simple task,” said Smith.

In fact, there are several competing factors in data center design. Reliability is probably the most important concern for data center operators. Following closely behind that is security. Both those attributes can lead to inefficiencies, such as when redundancies are built into the design.

But efficiency rates high on its own. “While many data centers are adopting design strategies for increasing energy efficiency at the risk of reliability, there are many design options that allow data centers to maintain a high reliability while increasing the energy efficiency of the facility,” says Robert J. Yester, P.E., design principal in charge of electrical engineering for mission-critical facilities at Swanson Rink, Denver. “The greatest gains in efficiency are often found in the mechanical design and the actual servers themselves; however, there are many electrical decisions that can impact the energy efficiency of the data center.”

For example, Yester lists medium-voltage distribution and 240V/415V computer power distribution, which can not only reduce energy consumption by up to 2% but also lower first costs. Electrical equipment, including UPSs and transformers, is also much more energy efficient than it used to be.

Despite the deceptively high average PUE score, Digital Realty’s survey results indicate increased efforts to improve efficiency. Four out of five respondents said they take steps to keep hot exhaust air from servers mixing with cold air used for cooling, known as hot-aisle or cold-aisle containment. That was up from less than two-thirds in 2011. And 85% use some type of data center infrastructure management software.

“We don’t run into any client who isn’t interested in energy efficiency,” says Christopher Johnston, P.E., senior VP of the Critical Facilities practice of Syska Hennessy Group, Atlanta. “The operating costs of a data center are becoming a larger part of the total cost of owning and operating a data center. We have some clients who are very much concerned about first cost, but very rarely do we run into clients who say, ‘No, I just want to build it as cheaply as I can; I don’t care what it costs to operate.’”

Computer equipment may be the biggest investment for data centers, but because of its turnover rate, it may not be the main concern regarding upgrades for efficiency. “Most data center operators are moving new computer equipment in and taking old equipment out on a continuous basis,” says Johnston. “Many of our clients say a piece of computer equipment is in service for only three years, and after that, it’s replaced.”

Therefore, the design must incorporate flexibility. “We create sustainable, modular designs so that, by the time the facility is 20 years old and it’s time to replace or upgrade the UPS equipment, you’ve already had five or six computer hardware changes. There has to be enough flexibility built in so you can accommodate what’s coming in the future.”

Regarding electrical design, the biggest savings can be found in what Johnston refers to as the low-hanging fruit. Syska’s designs minimize the number of levels of transformation in the facility. They also use medium-voltage distribution where possible. But reducing the electrical load for a data center requires a combination of specifying the right equipment and also maintaining it. Analytics software can predict spikes in data center energy demand. It has a module to determine optimal energy expenditure, an execution module for managing consumption in real time, and a verification and reporting module. “We do a lot of facility operations and maintenance consulting,” says Johnston. “We help clients write standard operating procedures.”

The firm also helps its clients model energy use in their data center with predictive software. For security reasons, it’s not a Web-enabled program, but it does help predict energy use under a given set of conditions. “If I set the model up for tomorrow and my energy usage tomorrow is higher than the model indicates it should be, then it gives me a good idea where my problems might be,” says Johnston. “Maybe the cooling towers are not right, or some valves are set wrong. The important thing is to get enough information so you can use the energy consumption figures as a tool to help you reduce your energy consumption.”
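The comparison Johnston describes, predicted use against actual use, can be sketched roughly as follows. This is not the firm's software: the subsystem names, the kilowatt-hour figures, and the 5% tolerance are all hypothetical, chosen only to show how a gap between model and meter points toward a likely problem area.

```python
def flag_deviations(predicted_kwh: dict, actual_kwh: dict, tolerance: float = 0.05) -> list:
    """Return (subsystem, excess_fraction) pairs where actual use exceeds the
    prediction by more than `tolerance`, largest excess first."""
    flagged = []
    for name, predicted in predicted_kwh.items():
        excess = (actual_kwh[name] - predicted) / predicted
        if excess > tolerance:
            flagged.append((name, excess))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical one-day figures for four subsystems.
predicted = {"it_load": 24000.0, "chillers": 9000.0, "cooling_towers": 3000.0, "ups_losses": 1500.0}
actual = {"it_load": 24200.0, "chillers": 9100.0, "cooling_towers": 3600.0, "ups_losses": 1520.0}

for name, excess in flag_deviations(predicted, actual):
    print(f"{name}: {excess:.0%} above the model")
```

With these invented numbers only the cooling towers stand out, which is the kind of pointer ("maybe the cooling towers are not right") the quote describes.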

Still, there have been recent improvements in electrical equipment, most notably in UPS systems. “We’re definitely seeing more efficient equipment specified as part of the design,” says Johnston. “Twenty years ago, the UPS equipment at peak loading would provide 88% or maybe even 90% efficiency but, because it operated at part load efficiency, we would probably get mid- to high 80s efficiency overall. These days, we’re looking at anywhere between 93% and 98% efficiency overall. That in itself makes major changes.”
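To see why a few percentage points matter, consider the power a UPS dissipates while carrying a constant load. The sketch below assumes a hypothetical 1,000kW critical load running all year, and picks 87% and 96% as sample points within the ranges Johnston quotes; none of these specific inputs comes from a real facility.

```python
HOURS_PER_YEAR = 8760


def ups_loss_kw(load_kw: float, efficiency: float) -> float:
    """Power lost in the UPS while delivering `load_kw` at the given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return load_kw / efficiency - load_kw


def annual_loss_kwh(load_kw: float, efficiency: float) -> float:
    """Energy lost over a year of continuous operation."""
    return ups_loss_kw(load_kw, efficiency) * HOURS_PER_YEAR


LOAD_KW = 1000.0        # hypothetical critical load
OLD_EFFICIENCY = 0.87   # assumed point in the "mid- to high 80s"
NEW_EFFICIENCY = 0.96   # assumed point between 93% and 98%

old_loss = annual_loss_kwh(LOAD_KW, OLD_EFFICIENCY)
new_loss = annual_loss_kwh(LOAD_KW, NEW_EFFICIENCY)
print(f"Older UPS loses about {old_loss:,.0f} kWh per year")
print(f"Newer UPS loses about {new_loss:,.0f} kWh per year")
print(f"Difference: about {old_loss - new_loss:,.0f} kWh per year")
```

The losses also turn into heat that the cooling system has to remove, so the real-world gap would likely be somewhat larger than this sketch shows.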

Leading the charge

One data center developer — Corporate Office Properties Trust (COPT), Columbia, Md. — is doing its part to design and build very efficient facilities. The COPT DC-6 project incorporates a number of energy-saving architectural designs that earned the facility a Leadership in Energy and Environmental Design (LEED) Gold certification (Photo 1). Among the innovations are a reflective roof, building sidewalls covered with green vegetation, and a chilled-water cooling system. However, its standby power system, made up of five rotary UPS units powered by standby generators and several static UPS units totaling 9MW of uninterruptible power for critical loads, is the standout feature (Photo 2). It is proportionally smaller, saving both energy and construction costs. The standby generators installed in the first phase of the facility’s construction are located in a mechanical building adjacent to the data center. The installation features the use of remote radiators on the gen-sets to free up more space in the mechanical building for switchgear and generators. This design also reduces the amount of outside air required for cooling. Without a mechanical load from the radiator fan, the remote radiators allow the gen-sets to start more quickly.

Room with no view

As industrial occupancies (or, as Johnston jokingly calls them, “a womb with no view”), data centers don’t require the same type of temperature regulation as buildings that must accommodate workers. There are few areas within data centers that are designated as people spaces, so the majority of the systems serve the computers, which have very different requirements. “We condition the data halls to keep the computers happy,” says Johnston, who explains that today’s strategy to save energy is to raise the operating temperature in the data hall. “We have some clients operating in the low 90s in the cold aisle during summertime,” he continues. “Other non-people spaces, including spaces such as the UPS and switchgear rooms, also operate at 85°F to 90°F. Those are not people spaces. The technicians might be hot and sweaty for the time they have to be in there, but the switchgear is perfectly happy. So the whole idea is to arrange the cooling to minimize energy use, and, of course, as time goes by, the computer equipment will tolerate warmer and warmer temperatures.”

Until more equipment can tolerate higher temperatures, however, it must be chilled. The cooling systems are usually the second biggest consumers of energy in the data center, after the computer equipment. “We work with the mechanical engineers to get a very efficient cooling system — one that makes the most sense on a particular project,” says Johnston. “Electrical systems are very efficient right now, so if you take cooling out of the situation, there’s no problem in getting 95% to 96% efficiency on the electrical side. That’s not the problem. The cooling has been more the problem lately, but that situation is changing as well.”

The selection of the cooling system depends on several factors. Location, meaning the local climate of where the data center is built, plays a primary role. The cost of electricity is also a major factor. “If you have a data center in California, where the cost of electricity is very high, it makes financial sense to spend extra money on a very efficient cooling system. But if you went to Washington State, where electricity is 2.5 cents a kilowatt hour, a very efficient cooling system might not make financial sense. You would pay a lot more money to build the very efficient system but, since you’re buying cheap electricity, you’ll never get that extra capital investment paid off,” says Johnston.
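Johnston's reasoning amounts to a simple payback calculation. In the sketch below, only the 2.5 cents per kilowatt hour comes from the quote; the extra capital cost, the annual energy saved, and the higher "California-like" rate of 15 cents are hypothetical placeholders.

```python
def simple_payback_years(extra_capital_usd: float, annual_kwh_saved: float,
                         price_usd_per_kwh: float) -> float:
    """Years needed for energy savings to repay the extra capital cost."""
    annual_savings_usd = annual_kwh_saved * price_usd_per_kwh
    if annual_savings_usd <= 0:
        return float("inf")
    return extra_capital_usd / annual_savings_usd


EXTRA_CAPITAL_USD = 1_000_000   # hypothetical premium for the efficient system
ANNUAL_KWH_SAVED = 2_000_000    # hypothetical energy saved per year

rates = {
    "low-cost power (2.5 cents/kWh, from the quote)": 0.025,
    "high-cost power (15 cents/kWh, assumed)": 0.15,
}
for label, rate in rates.items():
    years = simple_payback_years(EXTRA_CAPITAL_USD, ANNUAL_KWH_SAVED, rate)
    print(f"{label}: payback in about {years:.1f} years")
```

With these made-up inputs, the same system pays for itself in roughly 3.3 years at the higher rate but takes about 20 years at 2.5 cents, longer than much of the equipment is likely to stay in service.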

As a result, there are data center hot spots around the country, including places such as Dallas, North Carolina, New Jersey, and the Midwest, including Omaha. The combination of weather conditions and the price of electricity is the main draw in these locations. Other areas, such as Silicon Valley, Washington State, and Oregon, attract big data through proximity to corporate headquarters as well as cheap electricity.

A little help

With such high energy consumption, data centers are prime targets for energy-efficiency measures. Fortunately, there are several programs available through public and private channels. The Federal Energy Management Program (FEMP) helps federal agencies achieve greater data center energy efficiency and offers technical assistance through partnership agreements. FEMP participates in the U.S. Department of Energy’s (DOE) data center initiative, which also includes the DOE Industrial Technologies Program’s Save Energy Now in Data Centers Program and the Lawrence Berkeley National Laboratory. This group develops tools and resources to make data centers more efficient throughout the United States. Specifically, FEMP supports data center efficiency initiatives by encouraging federal agencies to adopt best practices, construct energy-efficient data centers, and educate energy managers and information technology professionals. FEMP has teamed with the General Services Administration (GSA) to develop a Quick Start Guide and offer workshops. FEMP also leads the Federal Partnership for Green Data Centers to facilitate dialogue between agencies.

The Energy Star program also offers resources for more energy-efficient data centers. It sponsors free non-technical webinars for facility managers and offers an overview of the top opportunities for efficiency, as well as practical implementation considerations. For new facilities, the DOE offers a best practices guide and also provides a number of data center analysis tools and case studies. The Green Grid recently completed a comprehensive analysis of return on investment and PUE at a data center for installing variable-speed fans, adding baffles and blanking panels, repositioning temperature/humidity sensors, and adjusting temperature set points.

Many electric utilities offer incentives and programs for increasing efficiency in both new and retrofit data center projects. In the Chicago area, ComEd’s program, Smart Ideas for Your Business, can help data center designers and managers identify energy-saving opportunities. Just in its second year, the program has graduated 10 data center projects and has about 20 more in its pipeline.

“We look at capital investment and operational improvements,” says Rob Jericho, senior manager, energy efficiency programs at ComEd. “We look at the different areas that could require some capital investment and combine that with operational improvements to get their energy efficiency approach. Every data center ends up being unique, which is one of the challenges of the program. But there are savings in almost all areas. Containment, which is allowing the building to increase its temperature so it can increase the amount of outdoor air it uses to cool the system, is where they usually end up saving the most energy.”

The program works with both new and existing facilities, but stresses early involvement, preferably at the design phase. “Before they do absolutely anything, that’s when we like to get in touch with them,” he says. “When they’re just beginning construction on their data center, we can assist with design of the data center and help bring attention to some energy-efficiency opportunities their design team may not have realized. We make sure they’re getting the right equipment for the right solution, and the contractors that they’re working with are accurately quantifying the savings. So they have a positive experience.”